This chapter covers

- Gaining an overview of OpenUSD

- A high-level look at OpenUSD’s core features

- Exploring OpenUSD’s impact, trends, challenges, and opportunities

- Examining some examples of OpenUSD in One Weekend

- A glimpse into the potential future of OpenUSD

Though Universal Scene Description (USD) was initially developed by Pixar in 2012 to streamline production of its animated movies, its practical applications have proved to extend well beyond the film industry. Pixar created USD to synchronize and manage large, complex 3D scenes that required concurrent input from multiple artists. In 2016, Pixar open-sourced USD, sparking interest across the many industries beginning to explore the potential of 3D graphical applications. Because of its open-source nature, it is now called OpenUSD.

Recognizing OpenUSD’s potential, the Alliance for OpenUSD (AOUSD) was formed in 2023 specifically to promote interoperability across all forms of 3D content. Central to that aim are the standardization of OpenUSD’s specifications and the development of new functionality to extend the existing implementation in Pixar’s original repository. In addition to Pixar itself, the AOUSD counts many large organizations among its members, including Adobe, Apple, Autodesk, NVIDIA, Intel, Epic Games, Siemens, Meta, and Sony, and its membership continues to grow.

OpenUSD is often described as a 3D file format, but this description only begins to capture its capabilities. OpenUSD is a comprehensive framework with extensive tools for creating, editing, and managing 3D scenes, designed specifically to handle 3D data with efficiency, interoperability, flexibility, and scalability. Together, these qualities enable smooth collaborative workflows with non-destructive editing, optimized performance, and high-quality real-time rendering.

The interoperability and extensibility of OpenUSD enable developers to create their own custom schemas and plugins, making it highly flexible and applicable in a wide variety of settings. The adaptability provided by its C++ and Python APIs has made it a valuable tool for developers and creatives working in diverse arenas, including manufacturing, science, robotics, entertainment, and art.

OpenUSD integrates with many 3D applications that have graphical user interfaces (GUIs), but its full potential is unlocked through scripting. A key application is building dynamic, interactive 3D scenes that respond to external data. Such tasks go beyond what GUIs can achieve and require scripting, much as developing a game or a website does. This book focuses on interacting with OpenUSD’s framework through Python scripting in a command-line environment. It equips developers and technical creatives with the fundamental skills needed to manipulate the framework with Python scripts, while illustrating the unique structure of OpenUSD stages.

Understanding OpenUSD’s full potential requires exploring the context in which it is designed to operate. To set the stage, we will begin with a high-level overview of OpenUSD, the challenges it was designed to address, and its far-reaching impact on the industries incorporating it into their workflows and pipelines.

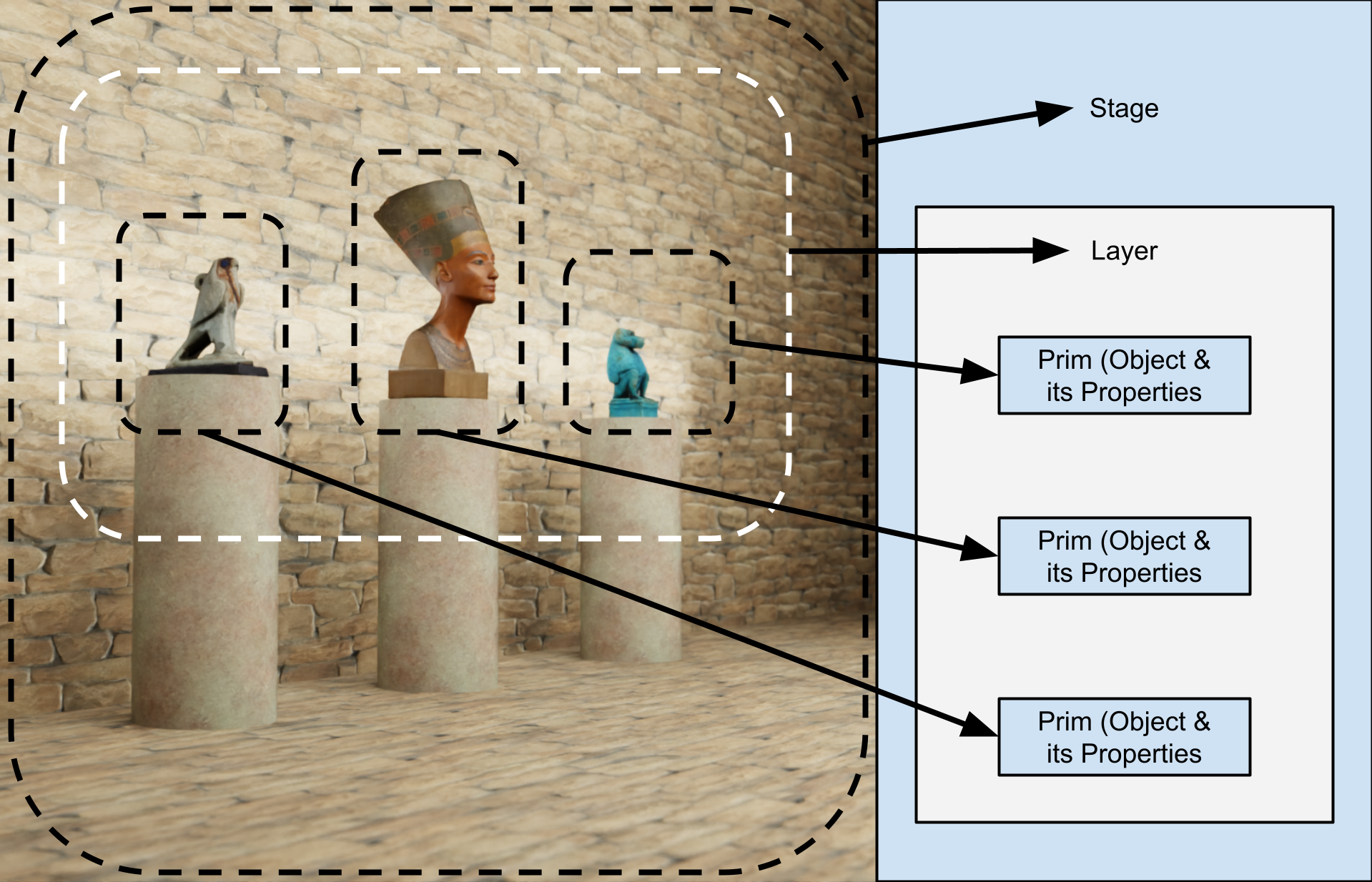

Figure 1: Introducing the basic structure of a USD scene, showing a stage containing a single layer and some prims.

1.1 Why Choose OpenUSD?

The future of the internet may well be in 3D. Today, we navigate the web and connect with each other via interactive web pages; in the future, however, we are likely to see that change to an internet of connected 3D, or ‘virtual’, spaces. Collectively, these kinds of spaces have been dubbed ‘the metaverse’, and they are already widely used for entertainment. They are also increasingly being applied in industry, where they are becoming known as the industrial metaverse. Whatever form the metaverse takes, to function well it requires efficient handling of data and good interoperability across multiple software platforms and file formats. This is what OpenUSD excels at.

OpenUSD’s particular strengths position it well as a major foundation of those industries and sciences beginning to utilize virtual space. Therefore, learning how to interact with the OpenUSD framework via scripting offers substantial benefits in modern 3D design and production workflows.

Firstly, OpenUSD offers a unified framework that streamlines 3D design pipelines, fostering efficient workflows and seamless, concurrent collaboration on complex projects. Its modular design supports iterative development, allowing team members to work on individual components without disrupting the overall project. This non-destructive approach lets contributors focus on their expertise while ensuring compatibility within the broader framework.

Secondly, OpenUSD simplifies the digitalization of 3D pipelines through accessible programming and efficient data management, enabling developers to automate repetitive tasks, manage large datasets, and maintain consistency across production stages. This approach boosts productivity, reduces errors and redundancies, and delivers higher-quality outputs. Additionally, OpenUSD’s flexibility supports various industry standards, ensuring smooth integration with existing tools and technologies to streamline the 3D production process.

Lastly, integrating AI-empowered toolkits into 3D production through OpenUSD opens up new possibilities for innovation. AI can enhance various aspects of 3D design, such as content generation, asset optimization, and rendering. This combination of AI and OpenUSD empowers developers and creatives to push the boundaries of what’s possible in 3D spaces.

As the demand for high-quality 3D content grows, mastering OpenUSD will very likely become an invaluable skill, positioning professionals at the forefront of technological advancements in the industry. Whether you wish to generate simulations based on real-world data, create bespoke functionality for metaverse applications, develop collaborative workspaces for multiple users, or begin to integrate AI into existing 3D workflows and pipelines, learning to work with OpenUSD will provide the tools you require. Many GUIs offer simpler ways to work with OpenUSD, with varying degrees of control; however, only by working with OpenUSD through scripting can you take advantage of its full potential and flexibility.

1.2 Key Capabilities of OpenUSD

As 3D continues to expand across industries, developers and studios will face increasing demands to collaborate on larger, more complex, and more detailed scenes and assets. This will require enhanced interoperability across platforms, along with more efficient workflows, data processing pipelines, and rendering processes. USD makes this possible by placing itself at the center of a hub of software platforms and pre-existing file formats. It achieves this through the following key features:

- Data ingestion: The capability of ingesting data via APIs and plugins from multiple sources, encompassing most of the file formats commonly used by existing 3D software platforms, such as OBJ, GLB, FBX, and CAD formats.

- Extensibility: The flexibility to allow custom-made schemas and plugins, which lets it expand to include sources less specific to 3D, such as CSV, JSON, and HDF5, giving it the potential for functionality beyond 3D use cases.

- Efficiency and scalability: These derive from its hierarchical scene graph organization; from sparse data representation, which uses deltas to record only the differences between datasets instead of duplicating them; and from instancing and referencing of assets to avoid redundant use of memory, in some cases without fully loading those assets into the scene.

- Collaboration: The ability to collaborate easily with others, in real time if necessary, through non-destructive editing via layering and versioning of a scene. This modular approach results in fewer iterative repetitions through any given workflow.
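The sparse, delta-based storage described in the list above can be illustrated with a toy sketch in plain Python. It is not the `pxr` API: an asset is assumed here to be a flat dictionary of attribute values, and an override stores only the entries that differ from the original. The functions `compute_delta` and `apply_delta` are hypothetical names used for illustration.

```python
# Toy model of sparse overrides (NOT the pxr API).
# An "asset" is a flat dict of attribute name -> value.


def compute_delta(base: dict, edited: dict) -> dict:
    """Return only the entries of `edited` that differ from `base`.

    Removed keys are not tracked in this simplified sketch.
    """
    return {key: value for key, value in edited.items()
            if key not in base or base[key] != value}


def apply_delta(base: dict, delta: dict) -> dict:
    """Compose a view of the asset: delta values win, base fills the rest."""
    composed = dict(base)  # copy, so the base data itself is never modified
    composed.update(delta)
    return composed


chair = {"displayColor": (0.2, 0.2, 0.2), "height": 1.1, "material": "fabric"}
red_chair = dict(chair, displayColor=(1.0, 0.0, 0.0))

delta = compute_delta(chair, red_chair)
print(delta)                                    # {'displayColor': (1.0, 0.0, 0.0)}
print(apply_delta(chair, delta) == red_chair)   # True
```

Only the changed color is stored, and the original `chair` dictionary is left untouched, which mirrors how an override leaves the asset it overrides intact.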

OpenUSD is to 3D graphics what HTML was to the web, replacing fragmented, proprietary formats with a unified standard. Its linking and layering capabilities parallel HTML’s modular structure, enabling seamless combination, reuse, and updating of 3D assets. This standardization unlocks new possibilities for collaboration across multiple software platforms and for innovation across industries. OpenUSD is also becoming to 3D applications what JSON is to structured data: an interoperable, extensible format. Unlike JSON, however, it supports complex scenes and is tailored for high-end production needs, where non-destructive workflows and layered data are critical. For this reason, USD will likely become the default file format used in the majority of 3D applications and production pipelines.

1.3 Examining the Core Features of OpenUSD

Let’s familiarize ourselves with the fundamental structure of any OpenUSD scene.

The Stage serves as the foundation of an OpenUSD scene, providing a workspace to view, edit, and save objects and properties. It acts as a container for the ‘Scene Graph’, a hierarchical structure that organizes all the elements of a scene. The Scene Graph defines how objects are arranged and related, simplifying the management of complex scenes and their properties. In essence, the scene graph provides the structure, while the stage is the environment where it exists and can be manipulated.

Layers are containers that store scene data, such as objects, their properties, and settings. Think of layers as transparent sheets placed over each other, where each sheet adds more or different detail to the final image without permanently altering what is underneath. Layers can be stacked, combined, or overridden during composition to build a complete scene, and they can contain different ‘opinions’ about how objects in the scene should look or behave. For example, one layer may color an object blue, while another layer holds a different opinion, coloring it red. The opinion from the layer with the highest priority (the strongest layer) is the one represented in the final output. This facilitates non-destructive collaborative design by multiple users.
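The ‘strongest opinion wins’ rule can be sketched in a few lines of plain Python. This is a toy model rather than the `pxr` API: each layer is assumed to be a dictionary keyed by `(prim_path, attribute_name)`, and the layer stack is a list ordered from strongest to weakest. The `resolve` helper and the layer names are hypothetical.

```python
# Toy model of opinion resolution in a layer stack (NOT the pxr API).
# Each layer maps (prim_path, attribute_name) -> value.
# The stack is ordered strongest first, weakest last.


def resolve(layer_stack, prim_path, attr_name, fallback=None):
    """Return the value from the strongest layer that has an opinion."""
    key = (prim_path, attr_name)
    for layer in layer_stack:
        if key in layer:
            return layer[key]
    return fallback  # no layer expresses an opinion


base_layer = {("/World/Cube", "color"): "blue", ("/World/Cube", "size"): 2.0}
override_layer = {("/World/Cube", "color"): "red"}  # a different opinion

stack = [override_layer, base_layer]  # strongest first
print(resolve(stack, "/World/Cube", "color"))  # red  (override wins)
print(resolve(stack, "/World/Cube", "size"))   # 2.0  (only the base has an opinion)
print(base_layer[("/World/Cube", "color")])    # blue (base layer is untouched)
```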

Primitives, referred to as Prims, are the basic building blocks of a scene. They represent objects or concepts, such as 3D models, cameras, lights, or groups. Prims are arranged hierarchically as parents and children, and they hold properties: attributes (for example, position or color) and relationships, which point to other prims or properties.

To summarize:

- Stage: The workspace that loads and combines Layers into a single Scene Graph to create a scene.

- Layer: Layers are combined to form a complete stage. A Layer is a container of Prims and Opinions. Each layer is typically an individual .usd file on disk.

- Prim (Primitive): The fundamental object in OpenUSD. There are various types of prims, such as geometry, lights, cameras, and shaders.
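To see how a stage’s scene graph and its prims relate, the following toy sketch builds a small hierarchy in plain Python and prints USD-style prim paths. It deliberately avoids the real `pxr` library, which provides these concepts through classes such as `Usd.Stage` and `Usd.Prim`; the `Prim` dataclass and `traverse` function here are hypothetical stand-ins.

```python
# Toy model of a stage's scene graph (NOT the pxr API).
from dataclasses import dataclass, field


@dataclass
class Prim:
    """A node in the scene graph: a named, typed prim with children."""
    name: str
    type_name: str = ""  # e.g. "Xform", "Mesh", "DistantLight"
    attributes: dict = field(default_factory=dict)
    children: list = field(default_factory=list)

    def add_child(self, child: "Prim") -> "Prim":
        """Attach `child` beneath this prim and return it for chaining."""
        self.children.append(child)
        return child


def traverse(prim, parent_path=""):
    """Yield (path, prim) pairs depth-first, building USD-style paths."""
    path = f"{parent_path}/{prim.name}"
    yield path, prim
    for child in prim.children:
        yield from traverse(child, path)


# A stage would hold this hierarchy beneath its root.
world = Prim("World", "Xform")
office = world.add_child(Prim("Office", "Xform"))
office.add_child(Prim("Chair", "Mesh", {"displayColor": (1, 0, 0)}))
world.add_child(Prim("KeyLight", "DistantLight"))

for path, prim in traverse(world):
    print(path, prim.type_name)
# /World Xform
# /World/Office Xform
# /World/Office/Chair Mesh
# /World/KeyLight DistantLight
```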

Figure 1 illustrates the basic structure of an OpenUSD stage. It shows a stage containing only one layer, whose data includes the prims and their properties. We will discuss stages containing multiple layers in more depth later.

1.3.1 Interoperability

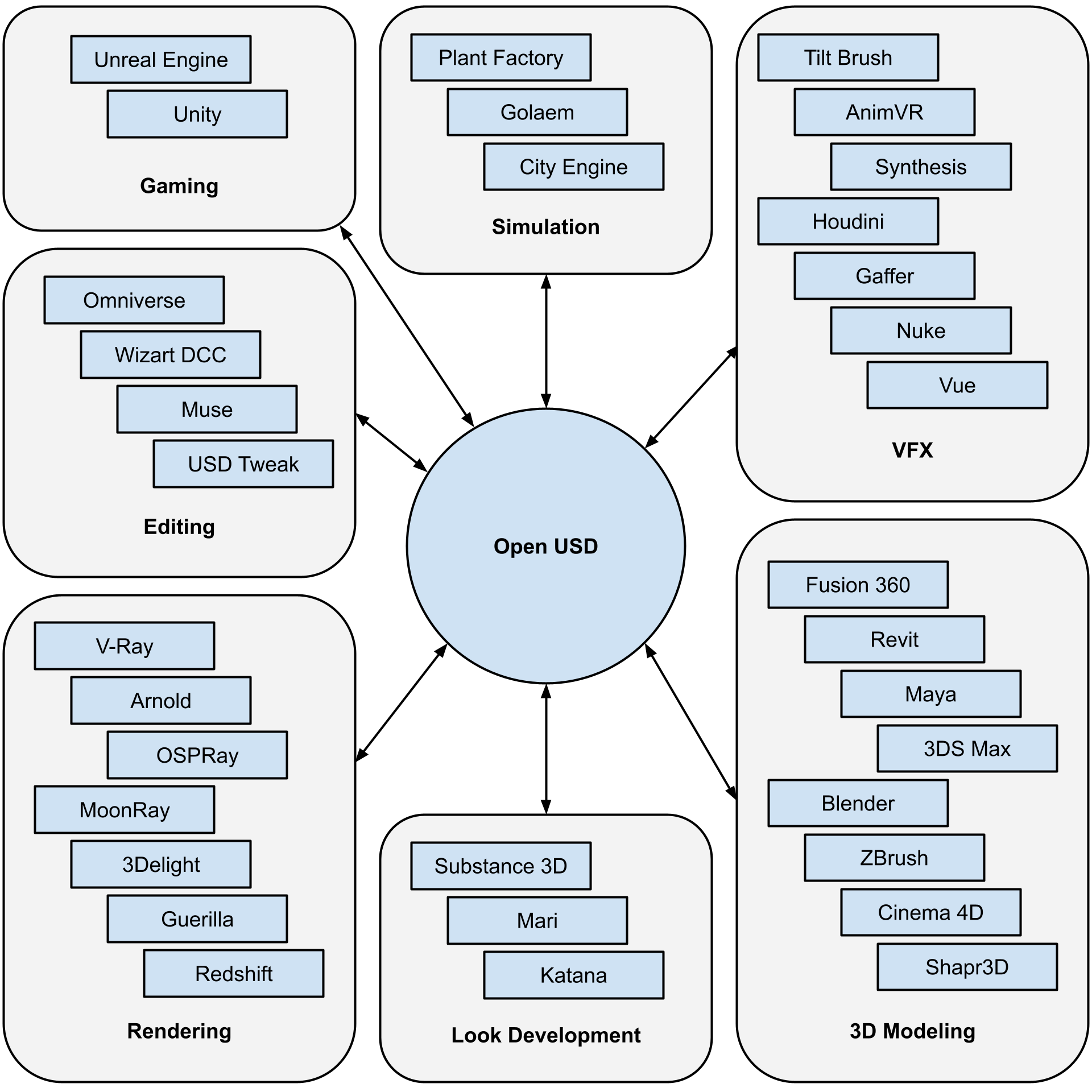

One of OpenUSD’s most significant advantages is its ability to facilitate seamless interchange between digital content creation tools (see Figure 2). This is achieved through its expanding set of schemas, which cover a wide range of domains including geometry, shading, lighting, and physics. By providing a common language and framework for these different domains, OpenUSD enables artists and technicians to work together more effectively, each using their tool of choice with far fewer compatibility concerns.

Figure 2: An array of platforms that work with OpenUSD, demonstrating its extensive interoperability.

To support this interoperability, OpenUSD is built to be highly extensible, featuring a robust plugin system that enables developers to integrate custom schemas, file formats, and other extensions. This flexibility allows OpenUSD to be customized to fit a wide range of pipelines and to evolve with the changing needs of industry.

1.3.2 Readability

OpenUSD prioritizes readability with a clear, intuitive architecture that simplifies development. Its modular design and well-documented APIs help developers quickly understand its structure and logic, enabling seamless integration into workflows, easy customization for specific needs, and efficient troubleshooting.

There is even a specific type of USD file designed to be human-readable: the .usda file. This text-based format stores scene data in plain text, giving developers the ability to locate and fix errors without needing complex tools to parse binary data (the binary counterpart is the .usdc format). This makes it an ideal choice for debugging, editing, and reviewing scene data, as well as for collaborating with others.

Program 1 shows an example of a .usda file that explicitly describes a 3D cube, capturing its essential attributes: its bounding extent, face topology, vertex points, transformation data, and color information.

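```usda
#usda 1.0

def Mesh "Cube"
{
    # Axis-aligned bounding box of the mesh
    float3[] extent = [(-1, -1, -1), (1, 1, 1)]
    # Topology: six faces of four vertices each, indexing into points
    int[] faceVertexCounts = [4, 4, 4, 4, 4, 4]
    int[] faceVertexIndices = [0, 1, 3, 2, 4, 6, 7, 5, 6, 2, 3, 7, 4, 5, 1, 0, 4, 0, 2, 6, 5, 7, 3, 1]
    # The eight corner positions of the cube
    point3f[] points = [(-1, -1, 1), (1, -1, 1), (-1, 1, 1), (1, 1, 1), (-1, -1, -1), (1, -1, -1), (-1, 1, -1), (1, 1, -1)]
    # Render as a plain polygonal mesh, not a subdivision surface
    uniform token subdivisionScheme = "none"
    # Transform operations, combined in the order listed in xformOpOrder
    double3 xformOp:rotateXYZ = (0, 0, 0)
    double3 xformOp:scale = (1, 1, 1)
    double3 xformOp:translate = (0, 0, 0)
    uniform token[] xformOpOrder = ["xformOp:translate", "xformOp:rotateXYZ", "xformOp:scale"]
    # A single red display color for the whole mesh
    color3f[] primvars:displayColor = [(1, 0, 0)]
}
```

Program 1: An example of a .usda file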
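Because a .usda file is plain text, its values can be copied into a script and checked directly. The following sketch re-types the Cube data from Program 1 as Python lists and performs three consistency checks: face counts against indices, index range against points, and extent against the points’ bounding box. It uses only the standard library; `check_mesh` is a hypothetical helper, not part of OpenUSD, whose own `pxr` bindings would normally be used to read these attributes.

```python
# Sanity-check the Cube data from Program 1 using plain Python lists.
face_vertex_counts = [4, 4, 4, 4, 4, 4]
face_vertex_indices = [0, 1, 3, 2, 4, 6, 7, 5, 6, 2, 3, 7,
                       4, 5, 1, 0, 4, 0, 2, 6, 5, 7, 3, 1]
points = [(-1, -1, 1), (1, -1, 1), (-1, 1, 1), (1, 1, 1),
          (-1, -1, -1), (1, -1, -1), (-1, 1, -1), (1, 1, -1)]
extent = [(-1, -1, -1), (1, 1, 1)]


def check_mesh(counts, indices, pts, ext):
    """Return a list of problems found; an empty list means the data is consistent."""
    problems = []
    # Each face consumes `count` consecutive entries of faceVertexIndices.
    if sum(counts) != len(indices):
        problems.append(f"counts sum to {sum(counts)}, but there are {len(indices)} indices")
    # Every index must point at an existing entry of `points`.
    bad = [i for i in indices if not 0 <= i < len(pts)]
    if bad:
        problems.append(f"out-of-range indices: {bad}")
    # The extent should equal the axis-aligned bounding box of the points.
    lo = tuple(min(p[axis] for p in pts) for axis in range(3))
    hi = tuple(max(p[axis] for p in pts) for axis in range(3))
    if [lo, hi] != [tuple(ext[0]), tuple(ext[1])]:
        problems.append(f"extent {ext} does not match bounds {[lo, hi]}")
    return problems


print(check_mesh(face_vertex_counts, face_vertex_indices, points, extent))  # []
```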

1.3.3 Composability and Composition Arcs

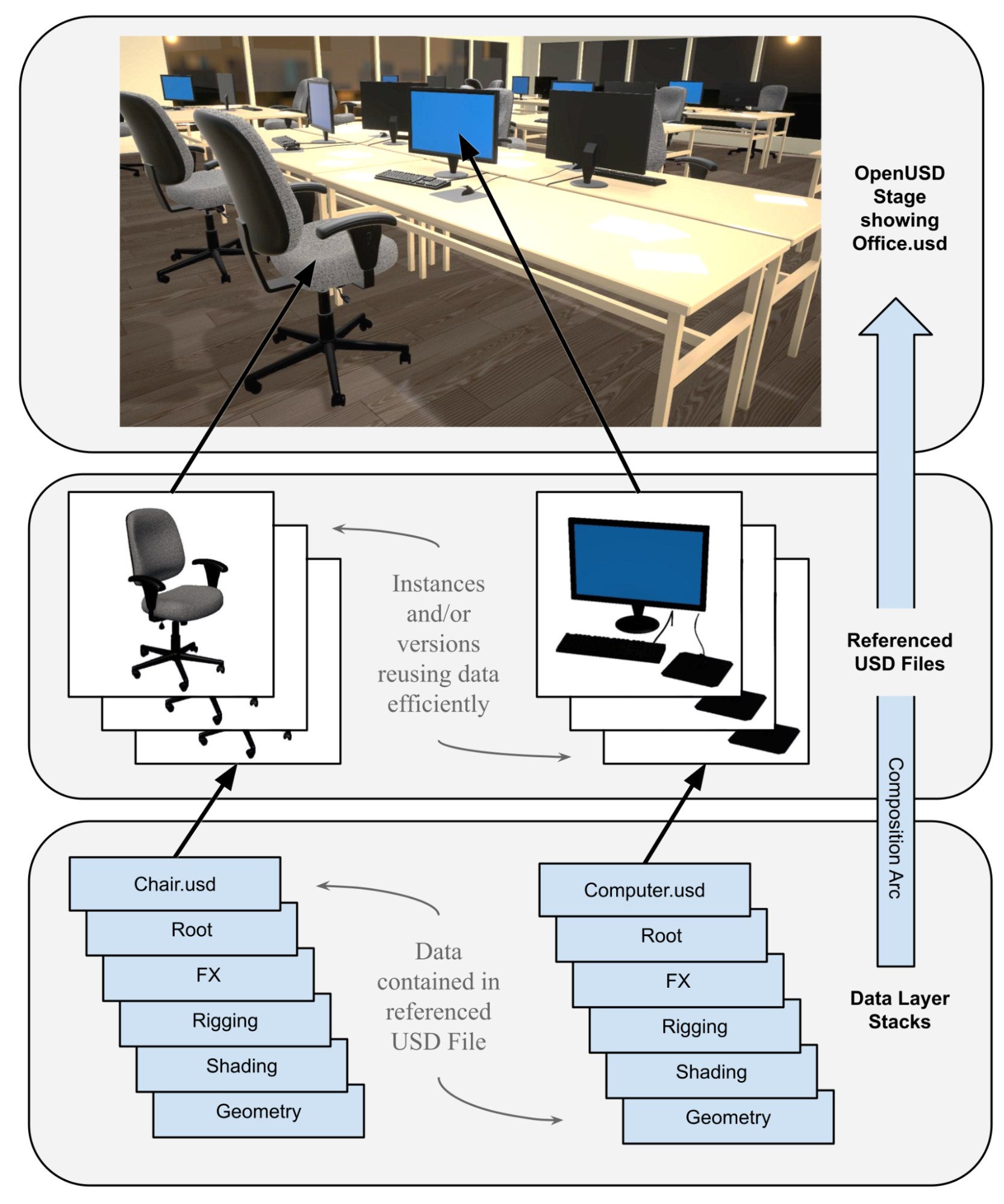

OpenUSD allows for a very modular structure when composing a scene. Data can flow from individual, smaller .usd files containing fully described items, such as objects, materials, or lights, into larger, more complex scenes, where the data can be expressed efficiently on the stage, using multiple instances if necessary. The mechanisms that govern this process are called composition arcs.

Composition arcs determine the flow of data from fundamental elements to the final scene in OpenUSD. They decide how objects and attributes are inherited, referenced, or overridden by prioritizing which layers and opinions contribute to the final stage.

Composition arcs define the order and precedence used to resolve conflicts when multiple layers provide differing values for the same prim or attribute. This ensures that the strongest opinions are used in the final composed scene. Think again of layers as transparent sheets, each altering or adding details to the layers below. It is the composition arcs that determine which aspects of each layer are visible and how they interact and integrate in the final scene.

Figure 3 shows an example of how separate .usd files describing an office chair and a computer are combined via composition arcs and instanced on the ‘Office.usd’ stage to populate a scene of an open-plan office.

Figure 3: An example of data flowing through composition arcs to populate a USD stage. The arc begins from individual .usd files, which, in turn, are referenced and instanced in the final .usd file that describes the complete scene.
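The memory benefit of referencing in the office example comes from many prims pointing at one shared description instead of each holding a copy. The toy sketch below is plain Python, not the `pxr` API; `Asset`, `PlacedPrim`, the file names, and the count of forty desks are all hypothetical. Every desk reuses a single chair asset and a single computer asset, and each placement stores only its own translation.

```python
# Toy model of referencing a shared asset (NOT the pxr API).
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    source_file: str    # the .usd file the asset is authored in (hypothetical)
    mesh_points: tuple  # heavy geometry data, stored once


@dataclass
class PlacedPrim:
    path: str
    asset: Asset        # a reference: points at shared data, no copy is made
    translate: tuple    # per-placement opinion


chair = Asset("OfficeChair.usd", tuple((x, 0.0, 0.0) for x in range(10_000)))
computer = Asset("Computer.usd", tuple((0.0, y, 0.0) for y in range(5_000)))

scene = []
for i in range(40):
    position = (float(i % 8) * 2.0, 0.0, float(i // 8) * 3.0)
    scene.append(PlacedPrim(f"/Office/Desk_{i:02d}/Chair", chair, position))
    scene.append(PlacedPrim(f"/Office/Desk_{i:02d}/Computer", computer, position))

unique_assets = {id(p.asset) for p in scene}
print(len(scene), "placed prims share", len(unique_assets), "asset descriptions")
# 80 placed prims share 2 asset descriptions
```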

There are different types of composition arc, serving different purposes and carrying different priorities. Much like layers, arcs with higher priority override those with lower priority. The types of composition arc are sublayers, references, variants, payloads, inherits, and specializes. They are evaluated in a specific strength order, known as LIVRPS (Local, which includes sublayers; Inherits; Variant sets; References; Payloads; Specializes), to resolve the final values for attributes or metadata in a scene. This order ensures a consistent and logical approach to determining which data takes precedence during composition. Each type is defined below:
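The effect of the LIVRPS ordering can be approximated as a simple ranking. This sketch is a deliberate simplification: in OpenUSD itself, composition is performed by the `Pcp` library and the ordering is applied recursively through nested arcs. Here each opinion is just tagged with the arc that delivered it, and the arc that comes first in LIVRPS wins. The names `LIVRPS`, `STRENGTH`, and `strongest_opinion` are hypothetical.

```python
# Simplified LIVRPS strength ordering (NOT the pxr API).
# Real composition applies this recursively; this sketch ranks flat opinions.
LIVRPS = ["local", "inherits", "variants", "references", "payloads", "specializes"]
STRENGTH = {arc: rank for rank, arc in enumerate(LIVRPS)}  # 0 = strongest


def strongest_opinion(opinions):
    """Pick the (arc, value) pair whose arc comes first in LIVRPS order."""
    return min(opinions, key=lambda op: STRENGTH[op[0]])


# Opinions about one attribute, gathered from different arcs.
color_opinions = [
    ("references", "grey"),   # from a referenced chair asset
    ("variants", "green"),    # from the selected variant
    ("specializes", "red"),   # weakest: used only as a fallback
]
print(strongest_opinion(color_opinions))                        # ('variants', 'green')
print(strongest_opinion(color_opinions + [("local", "blue")]))  # ('local', 'blue')
```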

- Sublayers: Enable the hierarchical organization of scene descriptions. They let you build complex scenes by stacking multiple layers on top of each other, where each layer can override or augment the data in the layers below it.

- References: Provide a mechanism for reusing assets within a scene. By referencing external assets, you can construct a scene from pre-built models, animations, and other components.

- Variants: Provide a way to define multiple configurations or states of an object within a scene. They let you create different setups for an asset, such as alternative textures, geometries, or properties, and switch between them as needed.

- Payloads: Manage optional, lazily loaded data for efficient handling of large datasets, such as high-detail models. Because payloaded data is loaded only on request, users can load just the data relevant to their current task.

- Inherits: Let a prim inherit properties from another prim, typically a class prim, akin to inheritance in object-oriented programming. Because every prim that inherits from a class picks up its opinions, one edit to the class can override many prims at once.

- Specializes: Similar to inherits, these let a prim draw customized properties from another prim. However, applying a specializes arc does not override the prim’s existing attributes; it augments them only where no stronger opinions are present.

Because specializes is the most difficult arc to understand, an example may be useful. A prim could be created to describe a material that makes any object appear scratched. A prim that specializes it keeps its existing attributes but gains the scratched appearance. Imagine two red cubes on a stage: one looks new, while the other specializes the scratched material and looks scratched. Now, at a higher level of priority on the stage, both cubes could be changed to blue, but the specialized cube will continue to look scratched through its specializes arc, provided that look is not also overridden at a higher level.
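The scratched-cube scenario can be modeled in the same toy style. The sketch below is plain Python, not the `pxr` API; the `scratched` material, the `compose` function, and the attribute names are hypothetical. It treats a specializes source as a set of fallback values: any stronger opinion replaces them, while attributes that nobody else sets still come through.

```python
# Toy model of a specializes arc as the weakest-strength fallback (NOT the pxr API).

scratched = {"displayColor": "grey", "roughness": 0.8, "scratches": True}


def compose(local_layers, specializes_source=None):
    """Merge opinions: specializes first (weakest), then layers weakest to strongest."""
    result = dict(specializes_source or {})
    for layer in reversed(local_layers):  # local_layers is ordered strongest first
        result.update(layer)
    return result


# Both cubes start red; only cube B specializes the scratched material.
cube_a_layers = [{"displayColor": "red"}]
cube_b_layers = [{"displayColor": "red"}]

# A stronger layer later changes both cubes to blue.
cube_a_layers.insert(0, {"displayColor": "blue"})
cube_b_layers.insert(0, {"displayColor": "blue"})

cube_a = compose(cube_a_layers)
cube_b = compose(cube_b_layers, specializes_source=scratched)
print(cube_a)  # {'displayColor': 'blue'}
print(cube_b)  # {'displayColor': 'blue', 'roughness': 0.8, 'scratches': True}
```

The material’s own `displayColor` never shows through on cube B, because every local opinion is stronger than a specializes arc, yet the scratch-related attributes survive because nothing else sets them.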

Together, these composition arcs allow users to efficiently construct and manage complex 3D scenes by assembling assets and layers, promoting modularity and reusability. This capability greatly enhances collaboration and scalability in 3D content creation.

1.4 Exploration of USD’s Industry Impact

The beauty of USD’s hub-like approach, positioning itself at the center of a network of other tools and data sources, is that creatives can work in familiar territory, whether in Maya, Cinema 4D, Houdini, or other 3D platforms, knowing that the results can be reliably transferred into a universal format for collaboration with others. Another bonus is that collaboration can even happen concurrently and in real time, whereas previously such teamwork could involve waiting for one stage of production to be completed before the next could begin.

1.4.1 Collaboration

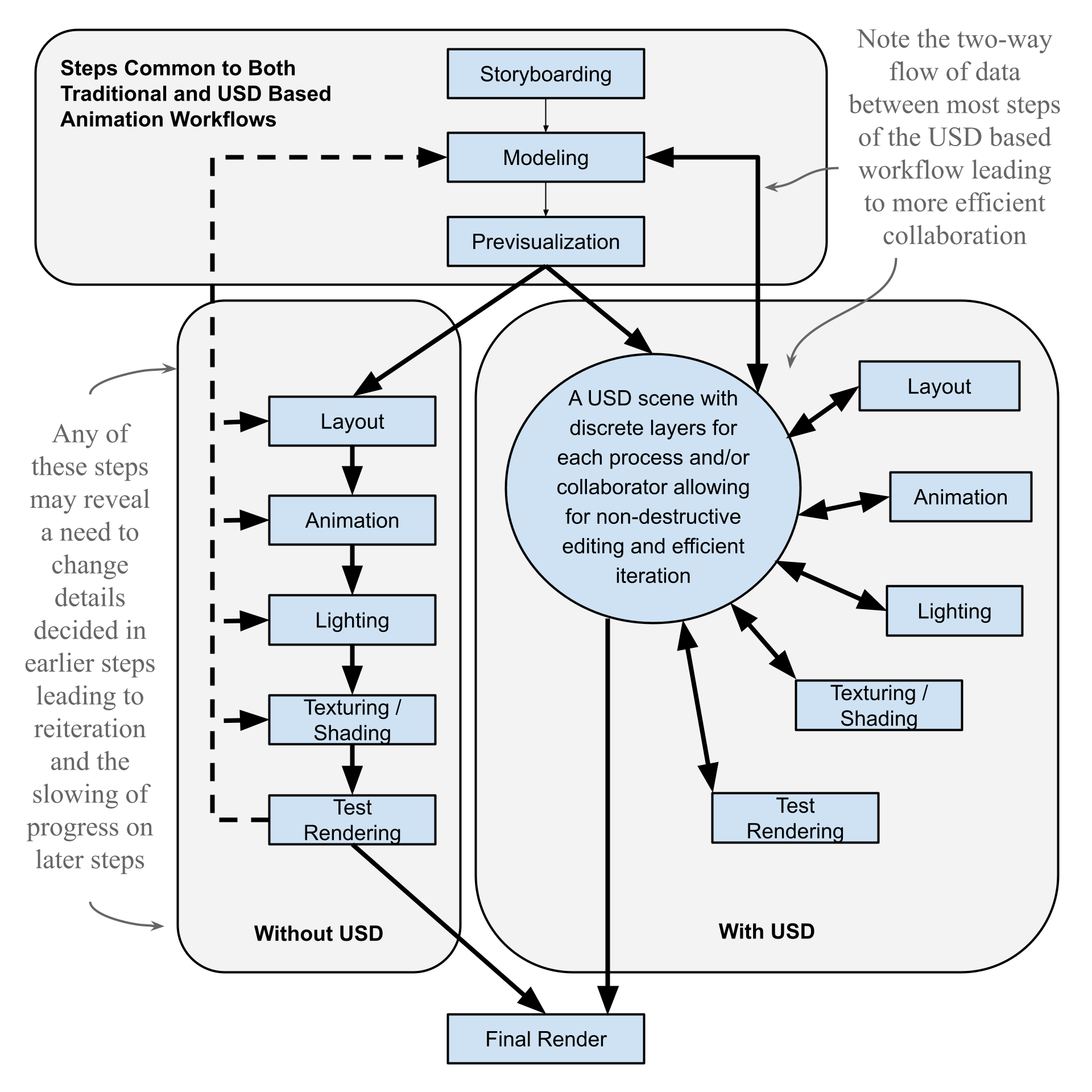

A good example of these advantages in action comes from revisiting the birthplace of USD: the creation of a 3D scene for an animated film. Prior to USD, the production process was full of bottlenecks caused by a reliance on multiple file formats and APIs, as well as by the complexity of managing the iterative development of even a single scene, let alone a whole movie. Figure 4 illustrates the difference between a traditional animation workflow and the more efficient workflow facilitated by USD. It shows how USD’s layered system of management and version control can significantly improve efficiency and interactive collaboration in a studio environment.

With or without USD, a preparatory phase is needed to start the process of building an animated scene. It begins with initial storyboarding, followed by the modeling of assets and then previsualization, in which a rough idea of which elements the scene requires (characters, props, scenery, cameras, lights, and so on) and where they are positioned is worked out.

It is at this point that the two workflows diverge:

Figure 4: A Comparison of Collaborative Workflows in the Creation of an Animated Scene - With and Without USD

Without USD, the next step after the preparatory phase would be the layout of the scene, where all elements are positioned, followed by the animation of characters, props, or cameras. Only once that was completed would the lighting be applied, after which texturing and shading would be refined so that the materials work well under that lighting, typically followed by some test renders to check the scene. All of this had to happen before the final rendering.

Figure 5: A Comparison of Collaborative Workflows in the Creation of an Animated Scene - With and Without USD

Previously (and currently in some cases), each stage would need to be completed before the next one begins. Further, should something from an earlier stage require alteration, such as the remodeling of a character or a change of layout or lighting to accommodate a new camera move, then the later stages of production might be put on hold until the required changes have been made.

The sequential process required without USD, with its loops back to earlier stages whenever something changes, can be both cumbersome and time-consuming. In project management terms, it is ‘waterfall’ development, in contrast to the ‘agile’ development made possible by USD.

With USD, after the initial storyboarding, modeling, and previsualization, the layout artists and animators can work on the same scene concurrently, each applying changes to their own layer and occasionally referencing the other’s layer to check for compatibility, perhaps even requesting alterations from each other in a two-way creative flow. Similarly, the lighting artists can refine their layers while the animation is still developing, perhaps discussing options with the modeler or animator to enhance all of their choices. Should an element decided in what would previously have been an earlier stage need alteration, such as the remodeling of a character or the swapping out of a prop, this can be done without interrupting the ongoing progress of later stages. Test renders can be run at any point ahead of the final render to assess the current state of the scene and inform any adjustments required to achieve the intended outcome.

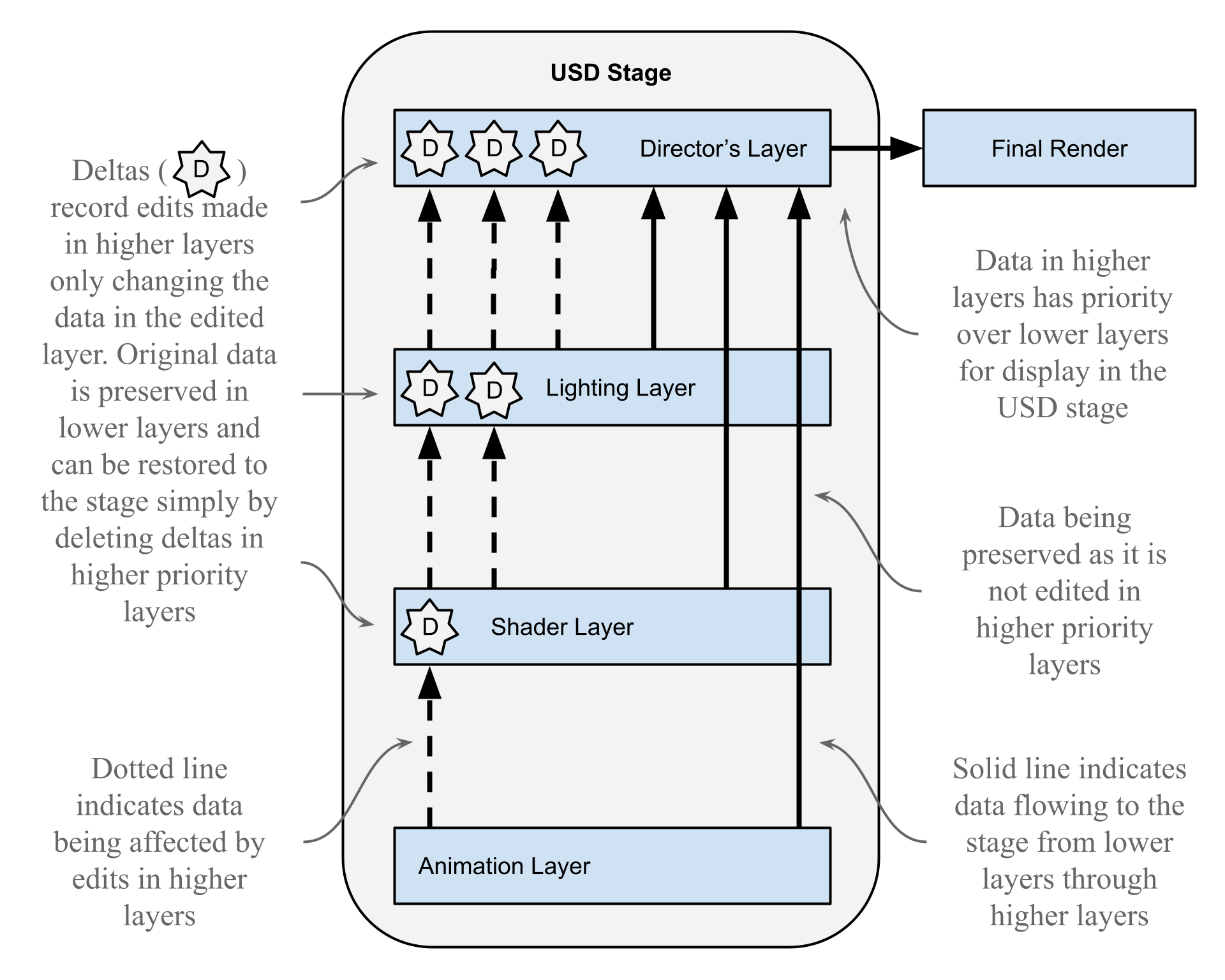

If we zoom into the USD stage shown in Figure 4, we can see how the layering system could work in the context of an animated scene. Figure 6 shows how data can flow upward from lower layers, passing through higher layers where it might be overridden, to ultimately reach the top layer, whose result is displayed on the actual stage.

Figure 6: Illustrating the layering and delta system in action within an animated scene.

In this example of the collaborative creation of an animated scene, creative decisions made in lower layers, such as the animator’s or the shader artist’s layer, can make it through the higher layers to influence the state of the final stage. However, opinions in higher layers take priority and can override decisions made lower down the layer stack. If that happens, the higher-priority changes are what will be displayed in the final stage.

As each change is made, it is recorded as a delta saved within the layer where the change was authored. This enables non-destructive editing in two ways: deltas can be deleted to restore previous states, or they can remain preserved, unchanged, in their own layer while being overridden by deltas created in higher-priority layers further up the stack. If the delta in the higher layer is deleted, the lower layer’s state filters through to the final stage. Put simply, any data authored in lower layers will show through in the final stage unless it is overridden in a higher layer.

We can see this process in action in the following scenario: Let’s imagine that the director of the scene makes an aesthetic decision and they want one of the lights moved. The director could ask the lighting artist to make the change in their own layer, which would be simple enough if they were working together on the same scene in real-time. However, if the lighting artist were not present, the director may opt to move the light in their own layer. The director can make this edit non-destructively, safe in the knowledge that the original position of the light is retained in the data of the lighting artist’s layer. The director’s edit would create a delta in the director’s layer which would record the new position of the light.

Now let’s imagine that later, the director and the lighting artist review the scene and following a discussion, decide that the light was better in its original position. Now, all that needs to be done to restore the scene’s previous state is for the director to delete the delta that was created when they edited the light’s position. This allows the data recording the original position of the light, contained in the lighting artist’s layer to filter through the director’s layer to influence the final scene. Clearly, the inclusion of USD into this kind of workflow can make for dramatic increases in efficiency, as well as engendering a shared creative experience for all collaborators, allowing the whole scene to reach the final render stage much sooner. It goes a long way to explaining Pixar’s prolific output of feature-length animated movies over recent years.
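The director’s edit and its later removal can be replayed with a toy layer stack in plain Python. This is not the `pxr` API (which offers calls such as `Usd.Attribute.Clear()` to remove an attribute’s opinions from the current edit target); here each layer is assumed to be a dictionary keyed by `(prim_path, attribute)`, the stack is ordered strongest first, and the prim path and positions are hypothetical.

```python
# Toy model of the director/lighting scenario (NOT the pxr API).
# Each layer maps (prim_path, attribute) -> value; the stack is strongest first.


def resolve(stack, key):
    """Return the value from the strongest layer with an opinion, else None."""
    for layer in stack:
        if key in layer:
            return layer[key]
    return None


KEY = ("/Scene/Lights/KeyLight", "translate")

lighting_layer = {KEY: (2.0, 5.0, -3.0)}  # the lighting artist's original position
director_layer = {}                       # stronger than the lighting layer
stack = [director_layer, lighting_layer]

director_layer[KEY] = (0.0, 6.0, -1.0)    # the director moves the light (a delta)
print(resolve(stack, KEY))                # (0.0, 6.0, -1.0)
print(lighting_layer[KEY])                # (2.0, 5.0, -3.0), still preserved

del director_layer[KEY]                   # delete the director's delta
print(resolve(stack, KEY))                # (2.0, 5.0, -3.0), original restored
```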

1.4.2 Beyond Entertainment

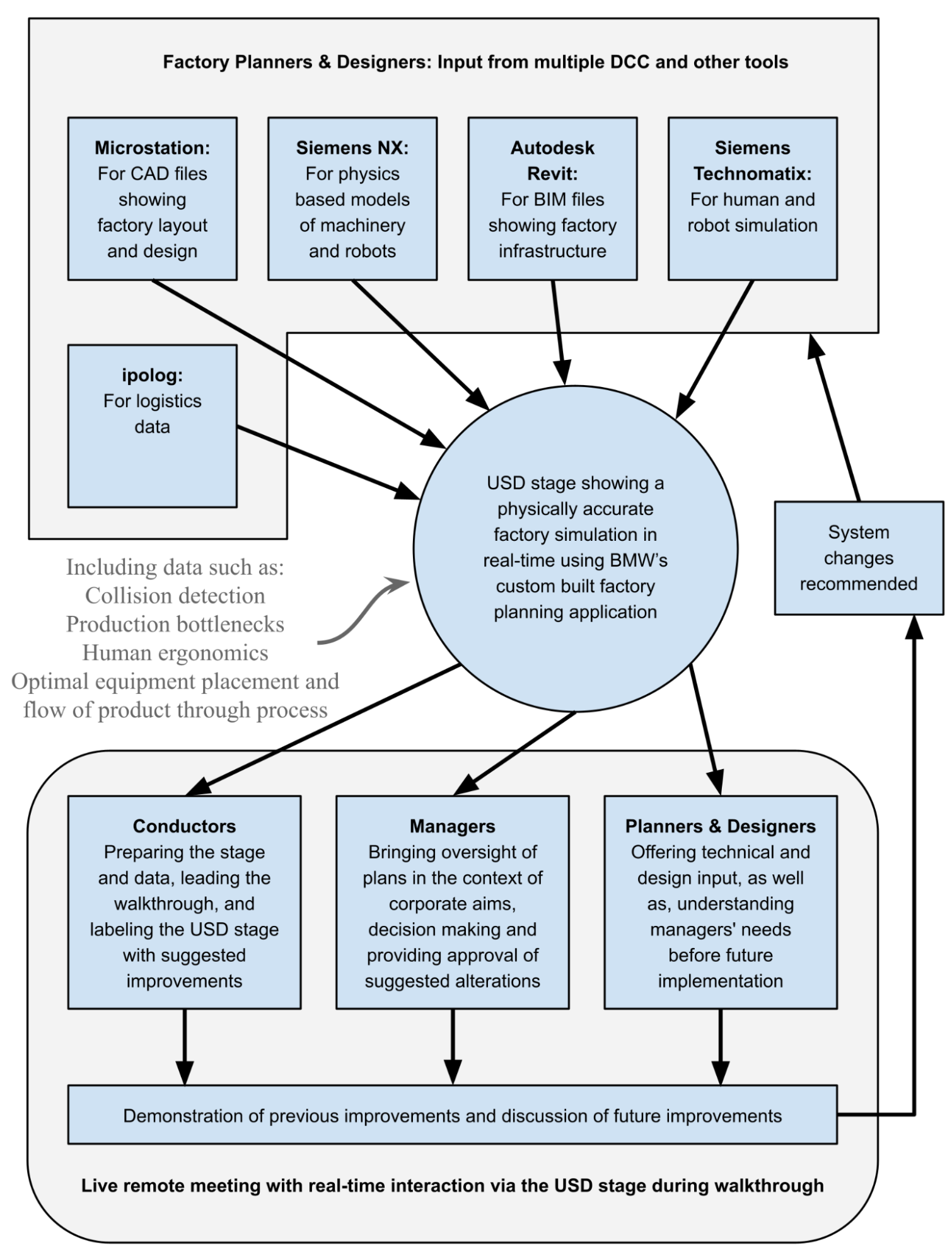

3D digital content creation (DCC) is no longer the sole province of the entertainment industries of animation, film, and gaming. Prominent industrial producers are also capitalizing on its potential for simulating production facilities or logistical networks. The lifelike digital simulation of a physical object, process, or system for the purpose of analysis and optimization has become known as a digital twin. In a digital twin, real-world objects are replicated virtually, allowing companies to simulate changes before applying them. An excellent example of USD playing a central role in the development of digital twins is BMW’s virtual factory planning. Building digital twins has enabled BMW to test its factory floors for efficiency before making costly physical adjustments. The company can even design, build, and test entire production lines virtually before beginning to build them physically.

1.5 Embracing the Future

Because it gives creative and technical teams an environment for more efficient collaboration, USD is well placed to integrate with many other technologies serving the needs of science, manufacturing, logistics, and the entertainment industry during the dawning age of the metaverse.

Central to many of these use cases will be real-time rendering. USD’s lightweight, scalable data structure and efficient data streaming capabilities make it well-suited for real-time rendering pipelines. Beyond the giant industries of gaming and film-making, there will be many other forms of interactive media that will require real-time rendering, including VR/AR, simulations for scientific, medical, or educational purposes, data visualization, digital twins, architectural or automotive presentations, prototyping and design, interactive advertising and even art exhibitions.

A prominent example of a company adopting USD is Apple. Its primary AR/VR product, the Vision Pro, uses USD to provide AR functionality in place of custom code. One interesting and creative application of USD on the Vision Pro is the live AR rendering of models as they are being created in Blender, achieved by continuously exporting a zipped USD file (.usdz) from Blender to the headset, something made possible by the lightweight nature of USD. This method of using USD to view a real-time AR rendering of an object in situ is already being implemented by online shopping platforms such as Shopify, enabling customers to see a product in 3D, in any position in their own home, before purchasing. If this form of product previewing becomes popular, the demand for 3D-aware artists and technicians able to work with USD is likely to expand considerably.

Soon, real-time rendering will be integrated into many aspects of modern life, enabling the development of many new forms of interactive media and applications, and USD is uniquely placed to facilitate this advance.

As discussed in the previous section, another rapidly expanding arena is that of digital twins. Because OpenUSD integrates well with the Internet of Things (IoT) and helps to facilitate real-time rendering, it has already been applied by Amazon Robotics, Siemens, and BMW Group to develop and test production lines and warehouse logistics in ways that were not previously possible. The use cases for digital twins will undoubtedly grow far beyond these early large-scale industrial models to include other big projects such as town planning, autonomous vehicle fleet management, and environmental modeling. As internet-connected sensors become smaller and cheaper, the data they provide will make ever smaller digital twins feasible, tailored more to individuals and small businesses than to industries and governments: twins of one’s home or car, perhaps even of our own bodies.

Scientists developing Earth-2, a simulation for studying and forecasting global environmental patterns using advanced accelerated computing, turned to NVIDIA’s USD-based platform, Omniverse, to provide exceptionally detailed visualizations of their models. It is USD’s ability to handle large-scale models and huge amounts of data that has made it the right choice for this vital project.

Boston Dynamics, a pioneer in robotics research and embodied AI, has incorporated USD to provide simulated environments, helping to develop reinforcement learning policies that improve the function of its robots. At the time of writing, the company is planning to release a kit that will employ USD, for experimentation by the wider robotics research community.

The world of medicine is also exploring 3D simulation, including for training robots to perform surgical procedures. A recent project called Orbit-Surgical has incorporated OpenUSD into its workflow, using the efficiency of the file format to vastly increase simulation frame rates for improved accuracy.

Telecommunications giant Ericsson has already begun to use USD to create city-scale digital twins that enable engineers to accurately model 5G networks prior to deployment. Previously, this process required planning with simplified models that struggled to predict some parameters, such as the mobility of users. This was followed by physical deployment and testing of the resulting signal quality at multiple locations, a relatively slow and expensive procedure. Since developing digital twins that exploit USD’s scalability and efficiency, Ericsson’s engineers have been able to simulate deployments and view signal quality at any location in the city in real time. With the added capability of viewing USD in VR, engineers can be fully immersed in the model, able to see signal pathways and beams radiating around the city and interacting with each other as well as with physical terrain and trees, something not possible in real life. USD’s efficiency in enabling real-time rendering and swift export to VR tools has resulted in much more rapid deployment and optimization of networks.

As so many industries embrace the potential of the metaverse, AI-driven design, and real-time 3D rendering, OpenUSD emerges as a vital tool for visualizing and interacting with this evolving landscape. By gaining proficiency with this framework, developers and technical artists can unlock new realms of possibility, helping to shape the future of industry, science, medicine, digital content creation, and immersive experiences.

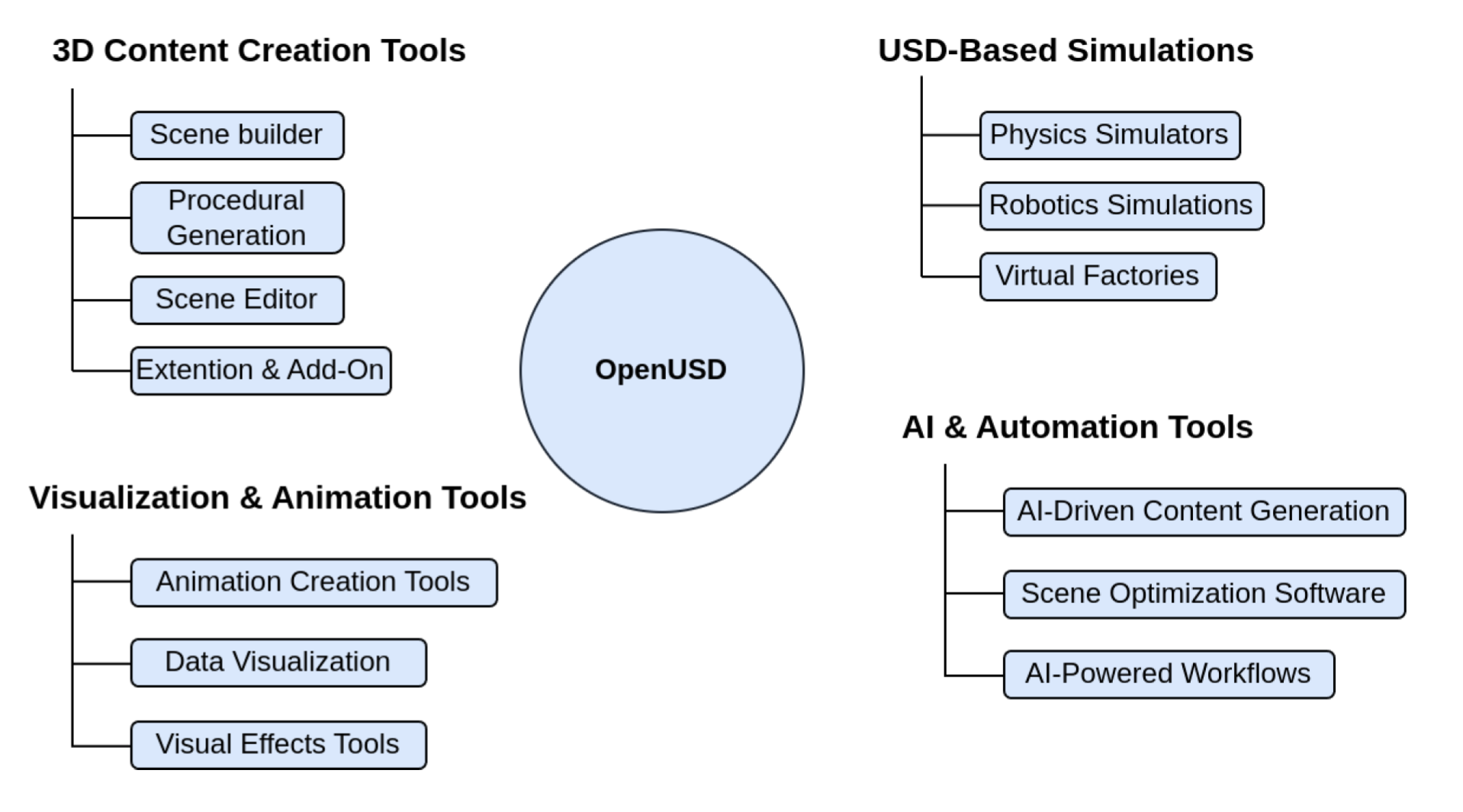

Learning Python scripting for OpenUSD unlocks the ability to develop versatile tools for 3D content creation, simulation, and visualization (see Figure 7). This includes applications for assembling and editing complex scenes, procedural generation, interactive editors, and animation tools. You can create physics simulations, robotics applications, and virtual training environments, utilizing OpenUSD’s strengths in physical simulation and scene representation. AI-driven tools are also possible, automating tasks like content generation, scene optimization, and simulation control for industries such as film, gaming, and robotics.

Figure 7: Overview of Software Development Possibilities with OpenUSD. This overview summarizes potential software applications developed with OpenUSD, including 3D content creation, simulations, visualization, and AI-driven tools.

Clearly, there is huge scope for technical creatives to play a role in any of these fields, and we hope this book will reveal the potential of working with OpenUSD, making it more accessible to those approaching it for the first time. Step by step, the following chapters will take you through the core elements of the framework, its language, modules, and hierarchical conventions, using practical Python code examples. We will begin with the very basics of introducing objects into a scene, then gradually increase the complexity until you feel able to work with physics simulations and visual effects, integrate AI into your workflow, and even create your own extensions or plugins.

Summary

- OpenUSD is a unified language and programming framework designed for streamlining and enhancing 3D workflows. OpenUSD uses stages, layers, prims, attributes, and relationships to efficiently represent complex 3D scenes.

- A set of operators (known as composition arcs), such as variants, references, payloads, and sublayers, allows for flexible and efficient management of complex 3D scenes by enabling modular asset assembly, version control, and hierarchical organization.

- OpenUSD is built to enable seamless interoperability across diverse data sources. With a growing number of tools already integrated, its adoption continues to expand.

- OpenUSD has the potential to become a central technology for real-time rendering, supporting diverse applications such as digital content creation, gaming, and AR/VR.

Reference

- Pixar’s USD GitHub Repository: https://github.com/PixarAnimationStudios/USD

- OpenUSD (AOUSD) Official Site: https://openusd.org

- USD Overview on Pixar’s Website: https://graphics.pixar.com/usd/

- Awesome OpenUSD: https://github.com/matiascodesal/awesome-openusd